EDIT: This page is old now, but it details my work in late 2012 to send MIDI over Bluetooth LE, which was not available at the time. Apple has since developed a standard for this and there are many products on the market. The remainder of this page is unedited from that time.

Overview

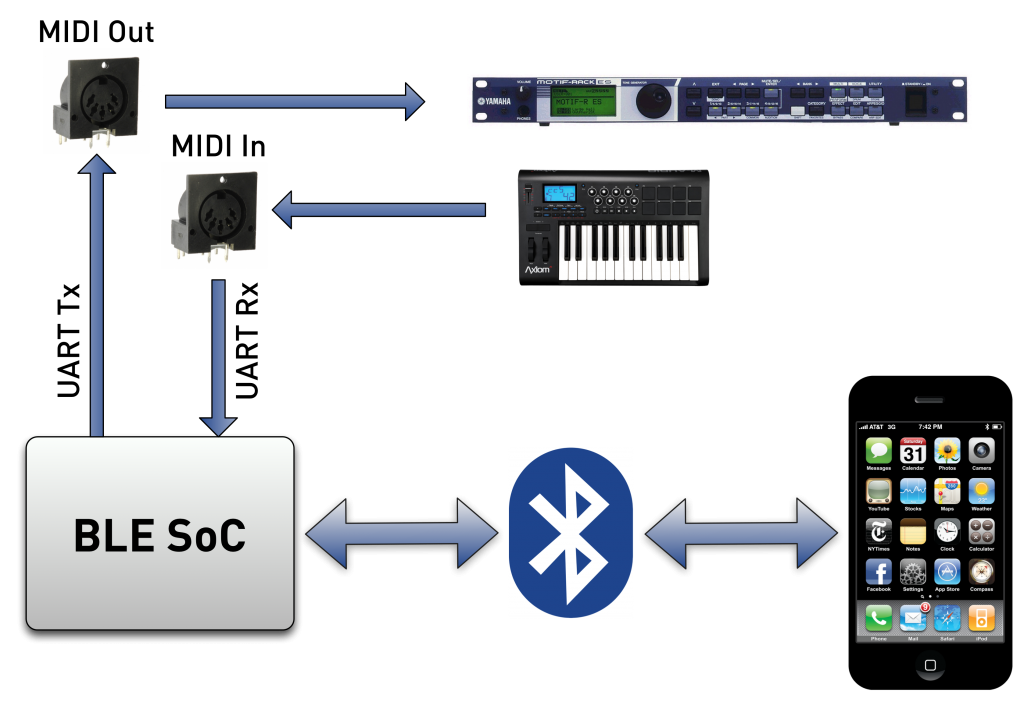

The diagram shown here gives an overview of the system. The iDevice exchanges MIDI messages with a small external piece of hardware via Bluetooth Low Energy (BLE). This new type of Bluetooth is what makes the whole project possible, and it is only supported on the iPhone 4S and newer iDevices. This includes the iPhone 5, iPad 3 and 4, newest iPod Touch, and iPad Mini. The small piece of hardware has a MIDI In and a MIDI Out port and relays messages between those ports and the iDevice. MIDI Out messages can be used to drive, for example, a DAW or hardware synth, and the MIDI In port can receive controller messages and forward them to the iDevice.

Hardware

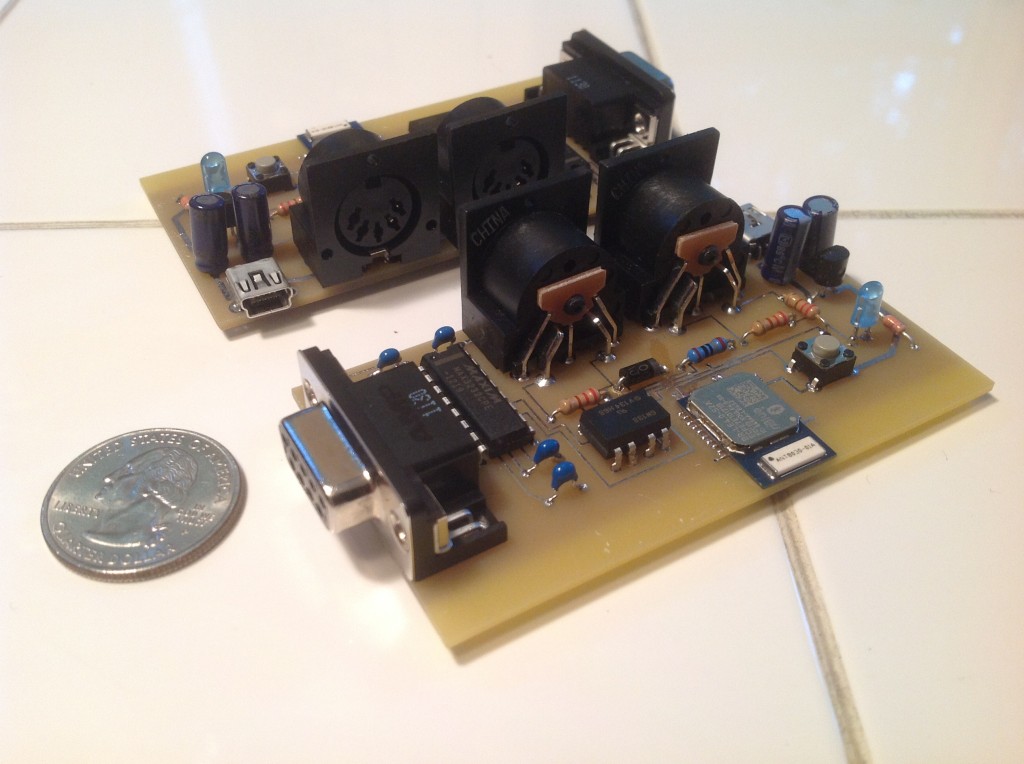

Here is a picture of my prototype hardware, which passes MIDI messages between the iDevice and the external MIDI device. The two boards pictured are identical; only one is needed. The board has a MIDI In and Out port and an RS-232 serial port that is only used to load new firmware onto the Bluetooth chip. Eventually, the serial port would be removed and the Mini USB port would be used for updating the firmware (if necessary). Currently, the Mini USB port is just used to power the board.

Functionality

In addition to the hardware, I have written the firmware that runs on the Bluetooth microprocessor and the iOS classes that translate between MIDI and BLE for sending and receiving messages. By integrating these classes into my work-in-progress ROTOSynth app (see tab, a fully functioning controller and synthesizer iPad app), I am able to either control an external synth using my app’s controller by sending MIDI messages to my hardware (and thus, out the MIDI Out port), or play my app’s synthesizer using an external MIDI controller (connected to the MIDI In on my hardware). Although already outdated, here’s a video of when I first got one side of the communication going (iPad controller to MIDI Out).
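As a rough illustration of what the MIDI-to-BLE layer has to do, here is a minimal Python sketch (not the actual firmware or iOS classes). It builds standard 3-byte Note On/Note Off messages and groups whole messages into BLE-sized payloads. The function names and the 20-byte limit are assumptions for the example: 20 bytes is what a default 23-byte ATT MTU leaves for data, and the real project may frame its packets differently.

```python
MAX_PAYLOAD = 20  # assumption: default BLE ATT MTU (23 bytes) minus 3 header bytes


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI Note On message (status byte 0x90 | channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a 3-byte MIDI Note Off message (status byte 0x80 | channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x80 | channel, note, velocity])


def pack_messages(messages, max_payload: int = MAX_PAYLOAD):
    """Group whole MIDI messages into payloads of at most max_payload bytes.

    Messages are never split across payloads (hypothetical framing).
    """
    payloads = []
    current = bytearray()
    for msg in messages:
        if len(msg) > max_payload:
            raise ValueError("single message larger than payload limit")
        if len(current) + len(msg) > max_payload:
            payloads.append(bytes(current))
            current = bytearray()
        current.extend(msg)
    if current:
        payloads.append(bytes(current))
    return payloads


if __name__ == "__main__":
    chord = [note_on(0, n, 100) for n in (60, 64, 67)]
    for payload in pack_messages(chord):
        print(payload.hex(" "))
```

Keeping each message whole inside a payload means the receiving side never has to reassemble a note across two radio packets, at the cost of a few unused bytes per packet.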

Latency

Now, the most important question everyone will ask is, “Ok, cool, but how does it stack up against the existing ability to send/receive MIDI messages over Wi-Fi on iDevices?” In short, it beats it – by a lot.

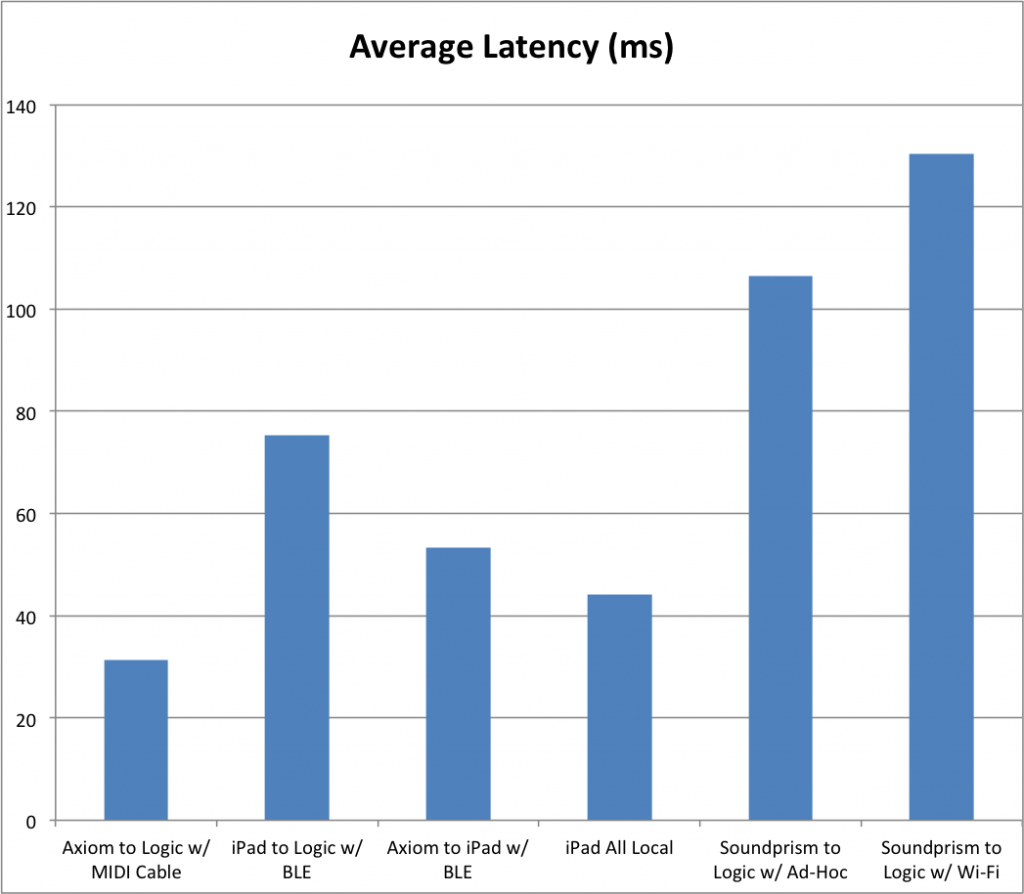

Below are two graphs which show the results of my latency testing of various performance scenarios. To test, I used two microphones connected to my PC through a Presonus Firebox recording simultaneously in my DAW. One mic was placed right next to the appropriate controller (a key on the MIDI controller or the screen on my iPad, depending on the test), and the other was placed at the sound source (either the studio monitor or iPad speaker, depending). For each test, I would begin recording on the PC, then initiate 10 Note On events on the appropriate controller. I struck the controller assertively so as to capture the touch event on the controller microphone. Then, by manual analysis in the DAW, I was able to see the latency between the controller touch event and the corresponding sound. Below is the average latency over 10 trials for each scenario.
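The per-note measurement described above boils down to pairing each touch onset on the controller microphone track with the matching sound onset on the other track. The sketch below shows that arithmetic in Python; I did the analysis by hand in the DAW, so the function name is hypothetical and the timestamps are made-up placeholders, not my measured data.

```python
from statistics import mean


def latencies_ms(touch_onsets_s, sound_onsets_s):
    """Return per-note latency in milliseconds from paired onset times (seconds)."""
    if len(touch_onsets_s) != len(sound_onsets_s):
        raise ValueError("each touch needs exactly one matching sound onset")
    result = []
    for touch, sound in zip(touch_onsets_s, sound_onsets_s):
        if sound < touch:
            raise ValueError("sound onset precedes its touch onset")
        result.append((sound - touch) * 1000.0)
    return result


if __name__ == "__main__":
    # Placeholder onset times read off the two recorded tracks (NOT real data).
    touches = [1.000, 2.000, 3.000]
    sounds = [1.012, 2.015, 3.011]
    per_note = latencies_ms(touches, sounds)
    print("average latency (ms):", round(mean(per_note), 2))
```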

Each scenario is explained a little more in depth here:

- Axiom to Logic w/ MIDI Cable – Essentially the control. A hardwired MIDI controller (M-Audio Axiom 49) into my USB Focusrite interface feeding into Logic.

- iPad to Logic w/ BLE – ROTOSynth acting as a BLE controller. External BLE device connected to MIDI In on the Focusrite interface and feeding Logic.

- Axiom to iPad w/ BLE – ROTOSynth acting as the synthesizer. Axiom controller connected to MIDI In on BLE device and controlling the Core Audio synth in my app.

- iPad All Local – ROTOSynth acting as both controller and synthesizer. Tested to get an idea of the baseline latency for an iDevice synthesis app (using Audio Units).

- SoundPrism to Logic w/ Ad-Hoc – Very popular iPad controller app that implements the Core MIDI framework. Used as a baseline for Core MIDI latency over an 802.11 ad-hoc network.

- SoundPrism to Logic w/ Wi-Fi – Same as above but using an 802.11n local Wi-Fi network rather than an ad-hoc network.

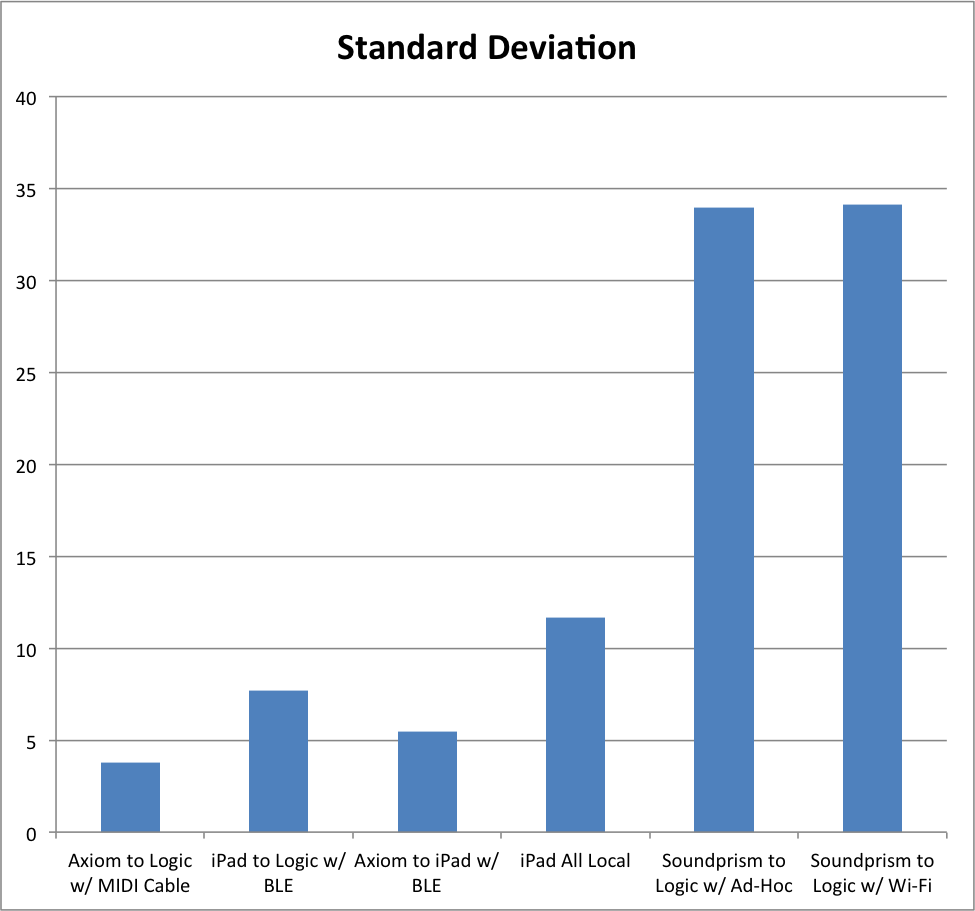

As can be seen, the latency with BLE is about 30 ms lower than the best-case scenario using Core MIDI’s implementation over Wi-Fi. Even more, look at the graph below, which shows the Standard Deviation (SD) of the 10 latency tests for each scenario. For those not familiar, the standard deviation gives a measure of how much the data varies around the average value. In this context, it tells us how much the latency varies from one note to the next. A high SD means the latency is all over the place; a low SD means the latency is very consistent.

The SD using BLE for MIDI is markedly lower than with MIDI over Wi-Fi. This means that the latency is more “learnable”: musicians can adapt to a consistent delay and are not left guessing when the note they press will actually be played.
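To make the “learnable” point concrete, here is a small Python sketch comparing two latency series with the same average but very different spread. The numbers are invented for illustration and are not my test results, and the use of the sample standard deviation (`statistics.stdev`) is an assumption about how one might compute it.

```python
from statistics import mean, stdev


def summarize(latencies_ms):
    """Return (average, sample standard deviation) for a list of latencies in ms."""
    return mean(latencies_ms), stdev(latencies_ms)


if __name__ == "__main__":
    # Invented example values, NOT measured data.
    steady = [20, 21, 19, 20, 20]    # consistent delay, easy to play around
    jittery = [5, 35, 10, 30, 20]    # same average, unpredictable note to note
    for name, series in (("steady", steady), ("jittery", jittery)):
        avg, sd = summarize(series)
        print(f"{name}: mean={avg:.1f} ms, SD={sd:.2f} ms")
```

Both series average 20 ms, but only the first lets a player anticipate the delay; the second is the “all over the place” case.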

Versatility

Now, the fastest way for users to get up and running with MIDI over BLE is for me to release the hardware along with a graphically basic app that supports Virtual MIDI. By doing so, my app could pass MIDI messages between other iOS apps and the connected external MIDI hardware. However, that’s the most basic application. Multiple BLE devices can be connected at once, each with its own input/output data stream, so ideally, you could control, or be controlled by, several external MIDI devices. Even further, a MIDI to DMX (lighting) box would be totally possible, so iOS apps could implement full control over live venue lighting! Beyond the basic implementation to get MIDI over BLE performing the functions of MIDI over Wi-Fi, there are countless more possibilities!
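One way to picture the multi-device idea is a small router that keeps a separate outgoing stream per connected device and picks the device from the MIDI channel of each message. This Python sketch is purely hypothetical: `MidiRouter`, the device names, and the channel-based routing rule are all assumptions for illustration, not part of any existing SDK.

```python
class MidiRouter:
    """Hypothetical router: one outgoing message queue per connected device."""

    def __init__(self):
        self._routes = {}    # MIDI channel (0-15) -> device id
        self._outgoing = {}  # device id -> list of queued messages

    def assign(self, channel: int, device_id: str) -> None:
        """Send everything on the given MIDI channel to the given device."""
        if not 0 <= channel <= 15:
            raise ValueError("channel must be 0-15")
        self._routes[channel] = device_id
        self._outgoing.setdefault(device_id, [])

    def send(self, message: bytes):
        """Queue a channel message; return the target device id, or None."""
        if not message:
            return None
        status = message[0]
        if not 0x80 <= status <= 0xEF:
            return None  # not a channel voice message, so no channel to route on
        device_id = self._routes.get(status & 0x0F)
        if device_id is None:
            return None  # no device assigned to this channel
        self._outgoing[device_id].append(message)
        return device_id

    def pending(self, device_id: str):
        """Return a copy of the messages queued for one device."""
        return list(self._outgoing.get(device_id, []))


if __name__ == "__main__":
    router = MidiRouter()
    router.assign(0, "synth-box")   # made-up device names
    router.assign(1, "dmx-box")
    router.send(bytes([0x90, 60, 100]))  # Note On, channel 0
    router.send(bytes([0xB1, 7, 127]))   # Control Change, channel 1
    print(router.pending("synth-box"), router.pending("dmx-box"))
```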

Interested?

If this sounds like something you’d like to use, either as an end-user or as a developer wanting to make cool, multi-MIDI device apps, comment below to let me know! With enough interest, I would certainly pursue this with a Kickstarter project for the hardware and a simple iOS app for interfacing with Virtual MIDI apps. If developers want to start implementing cool multi-device control, I’ll consider releasing an SDK. You guys determine it, so just let me know!